Whether you’re streaming live sporting events, esports, news or bi-directional interviews, nothing kills the viewing experience like high latency. Perhaps you’ve watched a soccer game online while your neighbor watches it live over the air, and you heard them celebrate the winning goal 10 seconds before you saw it. Worse still, imagine following election results only to see them appear in your Twitter feed before they reach your TV screen. In cases like these, low latency is critical to an optimal viewing experience and to strong viewer interactivity and engagement.

With so many different ways to watch television nowadays, it should come as no surprise that the delay (latency) between the moment a live event is captured and the moment it is displayed on a screen also varies greatly.

From first to last – the journey of live video

Live video broadcasting involves a number of steps, from camera glass to screen glass, and each one contributes to latency. “The first mile” involves feeding content from a camera to an encoder, which in turn transmits the video over a network to the production center or hub. Producing the live video adds further latency through a variety of operations. These can include switching between multiple live contribution feeds, mixing in commentator audio and video, and adding graphics and closed captions for playout.

Once live video is ready for playout, it is distributed to a variety of delivery platforms, including local terrestrial broadcast stations, cable head-ends, satellite teleports, and cloud-based OTT transcoding services. Finally, the live video content is transmitted from these delivery platforms to the end viewer, a stage known as “the last mile.”
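Because each of these stages adds its own delay, glass-to-glass latency can be thought of as a budget. The minimal Python sketch below simply sums per-stage latencies and reports each stage's share of the total. The stage names follow the text above; the millisecond values are hypothetical placeholders for illustration, not measurements of any real workflow.

```python
def latency_budget(stages: list[tuple[str, float]]) -> tuple[float, dict[str, float]]:
    """Sum per-stage latencies (ms) and return each stage's share of the total."""
    total = sum(ms for _, ms in stages)
    if total == 0:
        # Avoid dividing by zero when every stage reports no latency.
        return 0.0, {name: 0.0 for name, _ in stages}
    shares = {name: ms / total for name, ms in stages}
    return total, shares


# Hypothetical per-stage latencies in milliseconds, for illustration only.
pipeline = [
    ("first mile (contribution)", 200.0),
    ("production", 300.0),
    ("distribution", 500.0),
    ("last mile", 4000.0),
]

total_ms, shares = latency_budget(pipeline)
print(f"glass-to-glass: {total_ms:.0f} ms")
for name, share in shares.items():
    print(f"  {name}: {share:.0%}")
```

Since the stages are additive in this model, a downstream stage cannot win back latency introduced at the first mile; it can only avoid adding more.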

The type of last-mile delivery has a major impact on overall latency. Broadcasters and OTT services can manage this latency in a number of ways, including how they configure encoded streams and video players. However, the production, distribution, delivery, and playback stages all depend on the first mile: video contribution. To best manage overall video latency, it is important to minimize it at the outset without compromising image quality or bandwidth efficiency.

Lowering latency from the start

Every millisecond counts. A second of contribution delay has an outsized impact on production delay. This is partly because multiple video sources, coming from the field as well as the studio, need to be synchronized in real time. If one source is delayed, all the others are affected, because a larger delay buffer is needed to keep them in sync.
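The buffering effect can be sketched in a few lines of Python. This minimal model assumes each source arrives with a fixed, known contribution latency and that production aligns every source to the slowest one; real systems must also absorb network jitter, which the sketch ignores. The function name, source names, and values are hypothetical.

```python
def align_sources(latency_ms: dict[str, float]) -> tuple[float, dict[str, float]]:
    """Return the common aligned latency and the extra buffer each source needs.

    Every source must be held back until the slowest one arrives, so the
    aligned latency equals the maximum source latency.
    """
    aligned = max(latency_ms.values())
    buffers = {name: aligned - ms for name, ms in latency_ms.items()}
    return aligned, buffers


# Hypothetical contribution latencies (ms) for three feeds.
sources = {"field camera": 800.0, "studio camera 1": 40.0, "studio camera 2": 40.0}
aligned, buffers = align_sources(sources)
print(aligned)   # 800.0
print(buffers)   # {'field camera': 0.0, 'studio camera 1': 760.0, 'studio camera 2': 760.0}
```

In this model, cutting the field camera's latency from 800 ms to 100 ms would shrink the common delay, and both studio buffers, by 700 ms, even though the studio feeds themselves never changed.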

Contribution latency also slows the reactions of live television producers when they switch between incoming streams. As Robert B. Miller of IBM highlighted in his now-classic 1968 paper “Response time in man-computer conversational transactions,” humans perceive latency under 100 ms as immediate, which lets them respond instantly to incoming information, and this finding still holds today. Latency above this threshold slows live broadcast production decisions and has a domino effect on distribution and last-mile delivery.

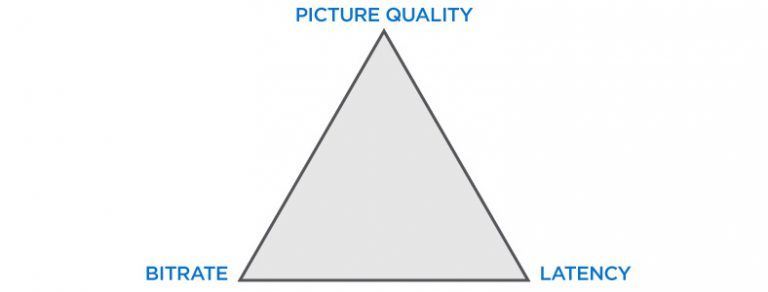

Balancing high quality and low latency

Minimizing first-mile latency is therefore critical for live video broadcasts. However, picture quality must also remain high, so that the image stays usable for post-production and playout. Poor-quality contribution video can become unwatchable at the receiving end once it passes through the multiple stages of video processing that make up a live production workflow.

Some contribution encoders deliver very high picture quality by applying little or no compression, but they require dedicated fiber or satellite links. When the first mile runs over a standard internet connection, however, the challenge is compressing video to low bitrates while maintaining high picture quality. Complicating matters further, sophisticated video codecs such as HEVC, designed for high picture quality at low bandwidths, require more processing power than MPEG-2 or H.264 and can therefore add latency.
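Some back-of-the-envelope arithmetic shows why low-bitrate contribution is so demanding. The Python sketch below assumes a 1080p60 source with 8-bit 4:2:0 sampling (12 bits per pixel uncompressed) and a 2 Mbps target bitrate; these are illustrative assumptions, not figures for any particular encoder, and `compression_stats` is a hypothetical helper.

```python
def compression_stats(width: int, height: int, fps: float, bitrate_bps: float,
                      raw_bits_per_pixel: float = 12.0) -> dict[str, float]:
    """Compare an uncompressed video rate with a target encoded bitrate.

    raw_bits_per_pixel defaults to 12, which corresponds to 8-bit 4:2:0 video.
    """
    pixels_per_second = width * height * fps
    raw_bps = pixels_per_second * raw_bits_per_pixel
    return {
        "raw_mbps": raw_bps / 1e6,                         # uncompressed rate
        "bits_per_frame": bitrate_bps / fps,               # encoded budget per frame
        "bits_per_pixel": bitrate_bps / pixels_per_second, # encoded budget per pixel
        "compression_ratio": raw_bps / bitrate_bps,        # raw rate / encoded rate
    }


stats = compression_stats(1920, 1080, 60, 2_000_000)
for key, value in stats.items():
    print(f"{key}: {value:,.3f}")
```

Under these assumptions the raw signal runs at about 1.49 Gbps, leaving the encoder roughly 33,000 bits per frame (about 0.016 bits per pixel), a compression ratio of roughly 750:1. Holding quality at that level of compression is the kind of job codecs such as HEVC are designed for.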

Haivision’s Makito encoders are engineered to keep latency as low as possible, under 55 ms in some cases. Their dedicated hardware encoding engine maintains low latency even with HEVC while delivering high-quality video at bitrates under 2 Mbps.

When the first mile combines bandwidth efficiency, high picture quality, and low latency, last-mile viewers can enjoy a great live experience over any network, with no spoilers.

Editor’s Note: This post originally appeared on Haivision.com and was republished with permission.